Data Engineering

Organize the data you have

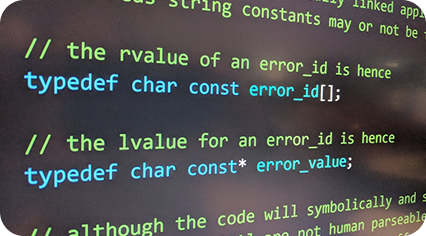

End-to-end bespoke software delivery. Language-agnostic development. Building the future with cutting-edge AI and unparalleled technical expertise.

CodeBlaze enables teams to deploy high-impact AI quickly. We accelerate business growth through tailored AI consulting, engineering, and intelligent agent solutions.

We deliver innovative solutions that elevate your brand and scale with your business, driving impact, strengthening presence, and fueling growth.

We have completed 50+ projects, solving unique challenges every time.

We have crafted 25+ AI-driven solutions to meet diverse business challenges.

We're proud that 96% of our clients report being satisfied with our work.

Always on. Always ready. Our support team is available 24 hours a day, 7 days a week.

We combine strategy, technology, and change management to help organizations streamline operations.

What's covered with each skill?

Pick the plan that works for you - upgrade anytime as your needs grow.

We deliver custom software that aligns with your goals, boosts efficiency, and drives growth.

Smart enterprise mobile apps: reliable, packed with features, built to scale, and optimized for usability.

Harnessing AI & ML to transform data into action, powering innovation and efficiency.

Strategic, tech-powered solutions to keep your business agile and ready for the digital future.

Cloud migration boosts scalability, flexibility, and efficiency with secure access to data and applications.

Streamlined DevOps for faster delivery, higher reliability, and teams that adapt quickly.

Our Product Development Cycle defines the key stages we use to turn concepts into high-quality, customer-focused products.

We combine innovation and collaboration to deliver user-centered solutions that meet business goals.

We design solutions that align user needs with business goals through research and collaboration.

We build MVPs with core features to validate concepts, gather feedback, reduce risk, and speed up time to market.

We refine products through user insights and testing, ensuring performance, usability, and alignment with evolving needs.

We launch with a strategic deployment that ensures seamless integration, strong performance, and readiness to scale.

We offer ongoing support to sustain performance and adapt your product to evolving business and user needs.

We stand out through our strong engineering, industry expertise, and focus on delivering scalable, secure, and user-centric solutions that create measurable impact.

Disruption drains attention. Great tech should reduce mental friction, not add to it.

In the AI era, employees are reluctant to adopt what makes them feel lost, exposed, or replaceable.

Tech means nothing if people can't use it. We design intuitive experiences and provide hands-on support.

Even the smartest tools fail if they feel difficult or complex.

True transformation is felt. Not just in systems, but in culture, wellbeing, and confidence.

We deliver real, measurable value with minimal disruption to your tools, workflows, or culture.

Passionate. Proactive. Expert.

We don’t just deliver projects; we build long-term collaborations grounded in trust, clarity, and shared ambition. These reviews reflect the way we work: transparent, strategic, and fully invested in our clients’ success.